AI Security Frontlines

We built AI systems assuming “read-only” meant safe and then gave them the power to infer, correlate, and act across our entire organization. In AI security, inference is the new privilege, and most enterprises are dangerously unprepared.

I've spent weeks auditing AI integrations at a cloud security startup. The gap between deployment speed and security frameworks is getting dangerous.

This isn't about theoretical AGI risks. Enterprises spent $37 billion on generative AI in 2025, up 3.2x. 92% of Fortune 500 companies use ChatGPT. 75% of knowledge workers use AI at work. Most security programs haven't adapted.

Traditional AppSec Doesn't Work Here

Every mental model we use for application security breaks when applied to AI systems.

Take Anthropic's Model Context Protocol (MCP), the framework connecting Claude to external data. It looks like standard API integration. It's not. When an LLM has authenticated access to Gmail, Google Drive, and internal databases, you're dealing with an autonomous agent that correlates and acts on data across your environment through natural language.

The old questions don't work. No SQL injection. Input validation is meaningless when "input" is unrestricted natural language. Least privilege? The whole point is connecting disparate systems.

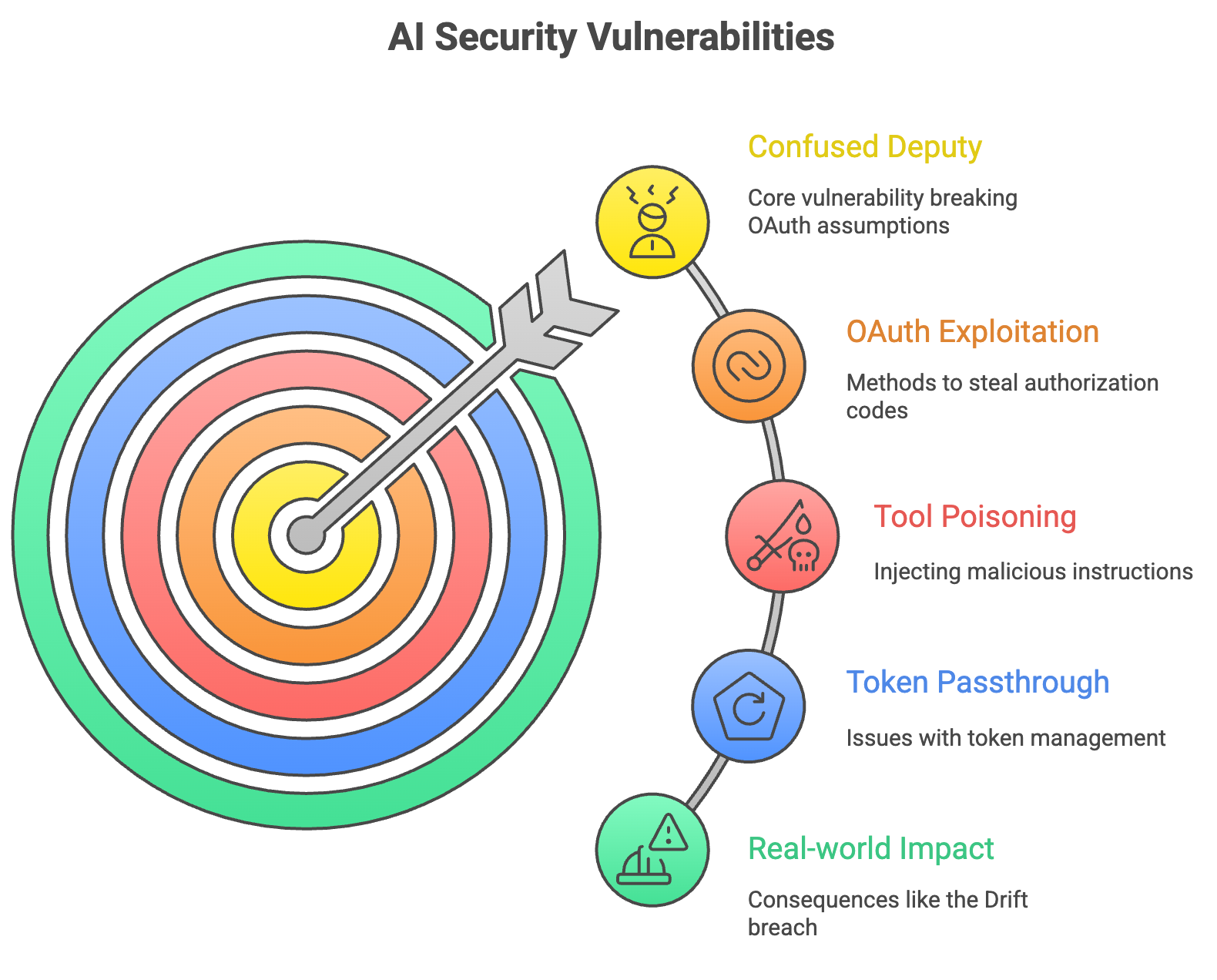

MCP has critical vulnerabilities: confused deputy attacks exploiting OAuth flows, tool poisoning where malicious instructions hide in tool descriptions, and token passthrough issues. Current implementations conflict with enterprise security practices.

The Confused Deputy Problem

The confused deputy is MCP's most dangerous vulnerability; it breaks fundamental OAuth assumptions.

How it works: MCP proxy servers use a single static client ID for all users. When User A authorizes Gmail access, that token lives alongside tokens for Users B and C. Attackers exploit poor session management to steal authorization codes during OAuth flows, then exchange them for access tokens.

The server becomes the "confused deputy": it holds legitimate credentials but can't validate who's requesting what. Result: an attacker uses the server's valid OAuth token to access User A's Gmail, and the request looks like legitimate API traffic.

Why defenses fail: The server trusts incoming requests because "it's an internal API call." Wrong. When multiple MCP servers connect to one client, a malicious server can inject instructions into tool descriptions that poison calls to legitimate servers. The LLM can't distinguish which instructions to trust.

Real-world impact: This is how the Drift breach scaled to 700+ organizations. Attackers didn't compromise 700 OAuth flows; they compromised one integration layer that held legitimate tokens for everyone.

Audit checklist: Token audience validation? Per-client consent? Static client IDs without session isolation? If you can't answer these, you're vulnerable.
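
To make the first two checklist items concrete, here is a minimal sketch of the checks a proxy could run before forwarding a request. It assumes the token's signature has already been verified upstream and that the claims arrive as a plain dict. `ConsentStore`, `authorize_request`, and the example audience URL are hypothetical names for illustration, not part of MCP or any OAuth library.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class ConsentStore:
    """Hypothetical record of which (user, client) pairs have consented."""
    granted: Set[Tuple[str, str]] = field(default_factory=set)

    def grant(self, user_id: str, client_id: str) -> None:
        self.granted.add((user_id, client_id))

    def has_consent(self, user_id: str, client_id: str) -> bool:
        return (user_id, client_id) in self.granted


def audience_matches(claims: dict, expected_audience: str) -> bool:
    """The `aud` claim may be a single string or a list of strings."""
    aud = claims.get("aud")
    if isinstance(aud, str):
        return aud == expected_audience
    if isinstance(aud, (list, tuple)):
        return expected_audience in aud
    return False


def authorize_request(claims: dict, session_user: str, client_id: str,
                      expected_audience: str,
                      consents: ConsentStore) -> Tuple[bool, str]:
    """Reject tokens minted for another service, another user, or a client
    the user never approved. Assumes signature checks happened upstream."""
    if not audience_matches(claims, expected_audience):
        return False, "token audience does not match this server"
    if claims.get("sub") != session_user:
        return False, "token subject does not match the session user"
    if not consents.has_consent(session_user, client_id):
        return False, "no recorded consent for this user and client"
    return True, "ok"
```

The point of the sketch is the ordering: audience first, then user binding, then per-client consent. A shared static client ID passes none of these checks by itself.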

We need new frameworks now.

The Drift Breach: OAuth Security Failure at Scale

In August 2025, threat actor UNC6395 compromised 700+ organizations through Salesloft's Drift—Cloudflare, Palo Alto Networks, Zscaler, Google, the list goes on.

The attack: GitHub compromise (March-June) → Drift's AWS environment → stolen OAuth tokens with gmail.readonly, drive.readonly, calendar.readonly scopes. August 8-18, systematic data extraction from 700+ Salesforce instances.

Security teams saw "read-only" and didn't flag it. Wrong. When an AI agent has read access to emails, documents, calendars, it has access to what matters. Cloudflare: 104 internal tokens exposed. Attackers used Python scripts and Salesforce Bulk APIs to pull customer contacts, support cases, embedded secrets.

The problem: 2015 mobile app thinking applied to 2025 AI systems. The question isn't "Can this app modify data?" It's "What can an AI agent infer and accomplish?"

Those tokens? Valid indefinitely. Inexcusable.

Action items: Audit OAuth integrations touching AI. Find scope combinations dangerous in aggregate. Implement time-bound tokens.
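
A rough sketch of what that audit could look like in code. The scope names come from the Drift example above; the list of risky pairs and the one-hour lifetime limit are assumed policy values you would replace with your own, and `audit_grant` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy: scopes that are each "read-only" but risky in aggregate.
RISKY_COMBINATIONS = [
    frozenset({"gmail.readonly", "drive.readonly"}),
    frozenset({"gmail.readonly", "calendar.readonly"}),
]

# Assumed upper bound on token lifetime.
MAX_TOKEN_LIFETIME = timedelta(hours=1)


def audit_grant(scopes, issued_at, expires_at):
    """Return a list of findings for one OAuth grant.

    `expires_at` is None for tokens that never expire.
    """
    findings = []
    granted = set(scopes)
    for combo in RISKY_COMBINATIONS:
        if combo <= granted:  # every scope in the combination was granted
            findings.append(
                "risky scope combination: " + ", ".join(sorted(combo))
            )
    if expires_at is None:
        findings.append("token never expires")
    elif expires_at - issued_at > MAX_TOKEN_LIFETIME:
        findings.append("token lifetime exceeds policy")
    return findings


if __name__ == "__main__":
    issued = datetime(2025, 8, 1, tzinfo=timezone.utc)
    for finding in audit_grant(
        ["gmail.readonly", "drive.readonly", "calendar.readonly"], issued, None
    ):
        print(finding)
```

Run it against an export of your OAuth grants. Any grant that returns findings deserves a manual review.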

What Actually Works

Contextual Access Control: Dynamic decisions based on what the AI is doing, not static permissions. We caught our agent pulling customer deployment configs for a billing ticket—without context-aware controls, we'd have leaked infrastructure details.

Comprehensive Logging: Log every interaction: prompt, tools called, data accessed, output. Most organizations have zero visibility. You need this when something breaks.

Assume Compromise: What's your blast radius? Network segmentation for AI workloads. Restricted actions even with valid credentials.

Red Team It: Our agent reconstructed our org chart, identified M&A discussions, and mapped our product roadmap, all from "read-only" access.
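
The first two controls fit together naturally, so here is one small sketch covering both: a context-aware check that also writes a structured audit record for every tool call. This is an illustration under assumptions, not our production system. The task types, data categories, and the `ToolCall` and `decide` names are all hypothetical.

```python
import json
import logging
from dataclasses import dataclass

logger = logging.getLogger("ai_agent.audit")

# Hypothetical mapping from the task the agent is working on to the
# data categories that task is allowed to touch.
TASK_ALLOWED_DATA = {
    "billing_ticket": {"invoices", "payment_history"},
    "deployment_support": {"deployment_configs", "runbooks"},
}


@dataclass
class ToolCall:
    task_type: str
    tool: str
    data_category: str
    prompt: str


def decide(call: ToolCall) -> bool:
    """Allow the call only if the data category fits the current task,
    and log the decision either way."""
    allowed_categories = TASK_ALLOWED_DATA.get(call.task_type, set())
    allowed = call.data_category in allowed_categories
    record = {
        "task_type": call.task_type,
        "tool": call.tool,
        "data_category": call.data_category,
        "prompt": call.prompt,
        "decision": "allow" if allowed else "deny",
    }
    logger.info(json.dumps(record))
    return allowed
```

With this mapping, a billing ticket that reaches for `deployment_configs` is denied and logged, which is the pattern we caught. Unknown task types are denied by default.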

The Vendor Problem

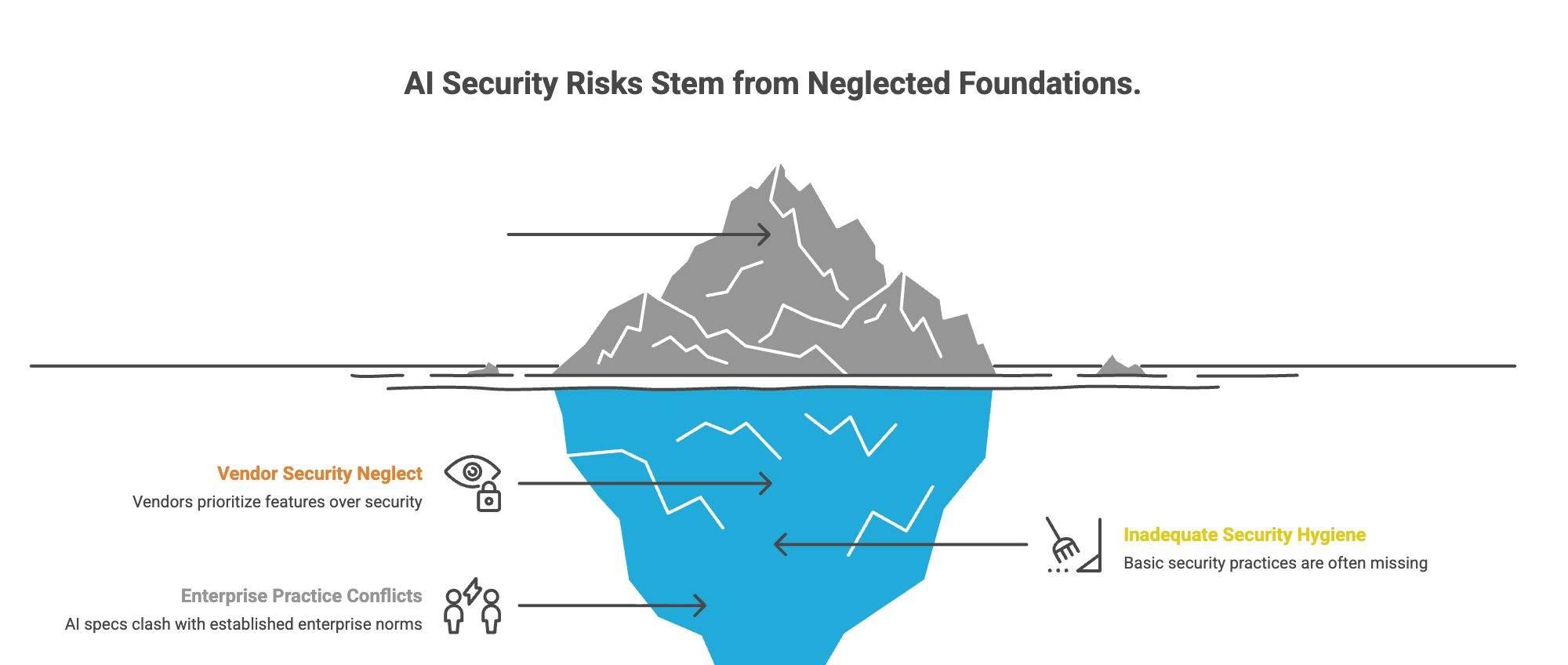

Most AI vendors don't know how to secure at enterprise scale. They're optimizing for features, not security. I reviewed one vendor's security docs: paragraphs on data governance recommending role-based access control for autonomous agents making thousands of queries.

Microsoft researchers note the MCP spec "conflicts with modern enterprise practices." 98% of breaches would be prevented by basic security hygiene, but we're deploying AI before that baseline exists.

Build your own controls. Set your standards. Share what you learn.

Three Emerging Threats

Prompt Injection: OWASP's #1 LLM risk. Attackers embed malicious instructions in documents the AI processes: hidden Unicode, scrambled text, instructions in tool descriptions. No reliable input sanitization exists for natural language. Behavioral monitoring is your only defense.

AI Reconnaissance: Attackers use LLMs to analyze your GitHub repos, job postings, and LinkedIn to map your stack. Assume your public footprint is continuously analyzed.

Training Data Poisoning: We obsess over software supply chain but ignore that models train on unvetted data. No detection mechanisms exist. Rethink vendor risk assessments.
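
On the hidden Unicode vector mentioned under Prompt Injection: you can't sanitize natural language, but you can flag invisible characters as one monitoring signal. The sketch below uses Python's standard `unicodedata` module. The choice of categories to flag (format and private-use characters) is an assumption, and it will produce false positives on legitimate text such as emoji sequences or soft hyphens.

```python
import unicodedata

# Assumed signal: format characters (Cf) such as zero-width spaces,
# bidirectional overrides, and tag characters, plus private-use (Co).
SUSPICIOUS_CATEGORIES = {"Cf", "Co"}


def find_hidden_characters(text: str):
    """Return (index, code point, name) for each invisible or
    private-use character found in the text."""
    findings = []
    for index, char in enumerate(text):
        if unicodedata.category(char) in SUSPICIOUS_CATEGORIES:
            findings.append(
                (index, f"U+{ord(char):04X}", unicodedata.name(char, "UNNAMED"))
            )
    return findings


if __name__ == "__main__":
    sample = "Quarterly report\u200b\U000E0049 attached"
    for index, code_point, name in find_hidden_characters(sample):
        print(index, code_point, name)
```

Treat a hit as a reason to look closer at the document, not as proof of an attack, and treat a clean result as no guarantee at all.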

Where We Go From Here

AI security is core infrastructure risk. Your developers use Copilot (50% of developers use AI coding tools daily). Your sales & marketing team uses ChatGPT (800 million weekly users). Your executives connect Claude to company data. This is happening whether you authorized it or not.

We're early enough to get this right. Enterprise AI hit $37 billion in 2025, 76% purchased rather than built. Move faster, experiment more, share failures.