AI Security Is an Architecture Problem, Not a Tools Problem

You can't protect AI agents making thousands of decisions per minute if you can't protect a support portal with a second authentication factor.

The recent announcement that Palo Alto Networks and Google Cloud are expanding their AI security alliance barely made a ripple outside cybersecurity circles. Most executives who noticed it filed it under "vendor partnership news" and moved on.

That's a mistake.

This partnership isn't just about two companies joining forces. It's a signal that the security industry has finally admitted something uncomfortable: the way we've been thinking about AI security is fundamentally wrong.

The 99% Statistic in Context

The headline number from the announcement, that 99% of organizations report AI-related attacks, sounds almost too high to be meaningful. When nearly everyone reports something, we tend to dismiss it as noise.

But here's what that number actually reveals: AI hasn't introduced entirely new vulnerabilities. It has amplified the ones you already have.

Consider what happened at Samsung's semiconductor division in 2023. Engineers used ChatGPT to help debug and optimize source code and to turn a recorded internal meeting into notes. In doing so, they inadvertently uploaded proprietary source code and confidential meeting content to a third-party AI service. No malicious actor was involved. No sophisticated attack occurred. Engineers simply used a productivity tool the way it was designed to be used, and sensitive intellectual property walked out the door.

This wasn't a security failure in the traditional sense. It was an architecture failure. The organization had no framework for understanding how AI tools would interact with sensitive data flows.
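One concrete piece of such a framework is a gate that inspects text before it leaves for an external AI service. The sketch below is a minimal illustration under stated assumptions: the pattern names, the `*.corp.example.com` host suffix, and `check_outbound_prompt` are all hypothetical. A real deployment would rely on the organization's own data-classification labels and DLP tooling rather than a few regular expressions.

```python
import re
from dataclasses import dataclass

# Hypothetical markers of sensitive content; real deployments would use the
# organization's own classification labels and DLP tooling instead.
SENSITIVE_PATTERNS = {
    "classification_label": re.compile(r"\b(CONFIDENTIAL|INTERNAL ONLY)\b", re.IGNORECASE),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "internal_host": re.compile(r"\b[\w-]+\.corp\.example\.com\b"),
}


@dataclass
class EgressDecision:
    allowed: bool
    reasons: list[str]


def check_outbound_prompt(text: str) -> EgressDecision:
    """Decide whether text may be sent to an external AI service."""
    # Collect the name of every pattern that matches; any match blocks egress.
    reasons = [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]
    return EgressDecision(allowed=not reasons, reasons=reasons)


print(check_outbound_prompt("Why does this loop never terminate?"))
# EgressDecision(allowed=True, reasons=[])
print(check_outbound_prompt("CONFIDENTIAL: test sequence for wafer line 3"))
# EgressDecision(allowed=False, reasons=['classification_label'])
```

The gate sits in the data flow itself, so the decision happens whether or not an individual engineer remembers the policy.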

Why Consolidation Alone Won't Save You

The instinct among security vendors and hyperscalers right now is consolidation. Collapse posture management, identity governance, and runtime protection into a single platform. One dashboard. One vendor. One point of accountability.

This sounds appealing. It isn't sufficient.

Look at the Microsoft Midnight Blizzard breach that came to light in early 2024. Russian state actors used a password-spray attack to compromise a legacy, non-production test tenant account that did not have multifactor authentication enabled, then abused OAuth applications to move laterally into Microsoft's corporate email environment. The attackers accessed the email accounts of senior leadership and cybersecurity staff for roughly two months before Microsoft detected the intrusion.

Microsoft has arguably the most sophisticated security tooling on the planet. They had visibility. What they lacked was real-time comprehension of how identity relationships and OAuth permissions were being exploited across interconnected systems.

Now multiply that complexity by every AI agent your organization deploys. Each agent operates with some identity, accesses some set of data, and takes actions on behalf of some user or process. Traditional security monitoring watches for known bad patterns. AI agents create entirely new patterns every time they run.

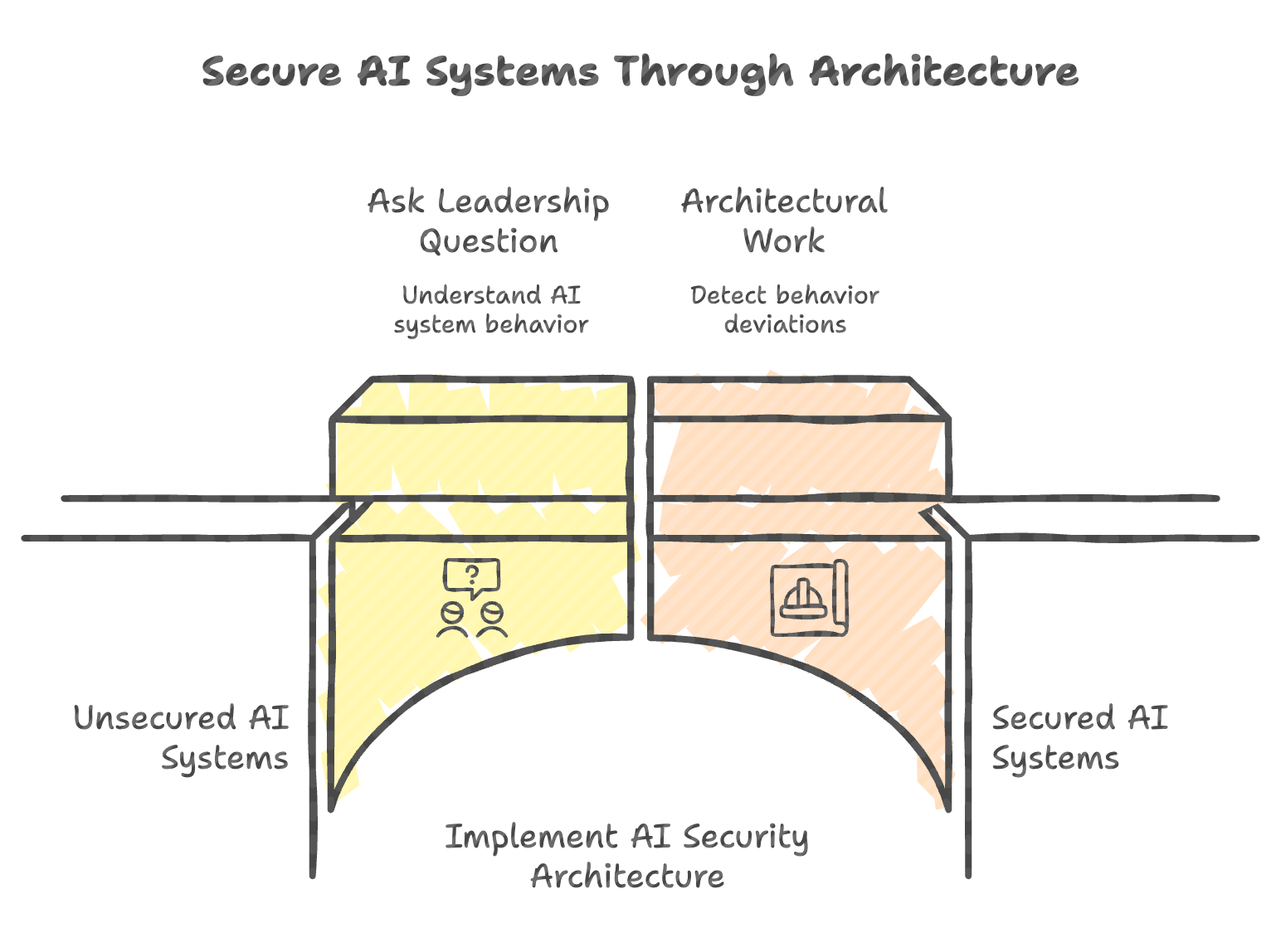

The Architecture Imperative

This is why AI security is becoming an architecture decision, not a procurement decision.

Shopify learned this the hard way when they discovered in 2023 that customer service AI tools could be manipulated through carefully crafted customer messages to reveal information about other customers' orders. The AI was doing exactly what it was designed to do: be helpful and answer questions. The architecture simply didn't account for the fact that "being helpful" could mean leaking data across customer boundaries.
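One architectural answer to cross-customer leakage is to enforce the customer boundary in the tool layer rather than trusting the model to respect it. The sketch below assumes a support agent that retrieves orders only through a tool object bound to the authenticated session. `OrderLookupTool`, the in-memory `ORDERS` store, and the customer IDs are hypothetical stand-ins, not any vendor's actual design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: str
    customer_id: str
    status: str


# Hypothetical in-memory order store standing in for a real database.
ORDERS = {
    "A-100": Order("A-100", "cust-1", "shipped"),
    "B-200": Order("B-200", "cust-2", "processing"),
}


class OrderLookupTool:
    """Order lookup exposed to a support agent, scoped to one customer.

    The customer ID comes from the authenticated session when the tool is
    constructed, never from model output, so a crafted message cannot widen it.
    """

    def __init__(self, session_customer_id: str):
        self._customer_id = session_customer_id

    def get_order_status(self, order_id: str) -> str:
        order = ORDERS.get(order_id)
        # Treat "not found" and "belongs to someone else" identically so the
        # reply does not reveal whether another customer's order exists.
        if order is None or order.customer_id != self._customer_id:
            return "No matching order found for your account."
        return f"Order {order.order_id} is {order.status}."


tool = OrderLookupTool(session_customer_id="cust-1")
print(tool.get_order_status("A-100"))  # Order A-100 is shipped.
print(tool.get_order_status("B-200"))  # No matching order found for your account.
```

However persuasive an injected message is, the model can only ask for orders the session is already entitled to see.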

You cannot bolt security onto AI systems after deployment and expect meaningful protection. The attack surface of an AI agent isn't a perimeter you can defend. It's a web of relationships: between the agent and the data it can access, between the agent and the systems it can invoke, and between the agent and the identity it assumes.

Securing that web requires building security into the architecture from the beginning. It requires designing systems where AI agents operate with least privilege by default, where every action is attributable, and where behavioral baselines are established before production deployment.
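As a minimal sketch of the first two properties, the code below assumes that every agent action passes through a single gateway. Each agent identity starts with no permissions. The gateway records every attempt, including denied ones, along with the agent that made it and the user it acted for. `AgentIdentity`, `ActionGateway`, and the action names are hypothetical. Production systems would typically delegate these checks to an existing identity provider and audit pipeline.

```python
import time
from dataclasses import dataclass, field


@dataclass
class AgentIdentity:
    agent_id: str
    on_behalf_of: str
    # Least privilege by default: an agent starts with no allowed actions.
    allowed_actions: frozenset = frozenset()


@dataclass
class ActionGateway:
    audit_log: list = field(default_factory=list)

    def invoke(self, agent: AgentIdentity, action: str, target: str) -> bool:
        """Check an action against the agent's allowlist and record the attempt."""
        permitted = action in agent.allowed_actions
        # Every attempt, permitted or not, is attributable to an agent and a user.
        self.audit_log.append({
            "ts": time.time(),
            "agent_id": agent.agent_id,
            "on_behalf_of": agent.on_behalf_of,
            "action": action,
            "target": target,
            "permitted": permitted,
        })
        return permitted


gateway = ActionGateway()
bot = AgentIdentity("invoice-bot", "alice", frozenset({"read_invoice"}))
print(gateway.invoke(bot, "read_invoice", "INV-42"))  # True
print(gateway.invoke(bot, "issue_refund", "INV-42"))  # False
```

Logging denied attempts matters as much as logging successful ones. A burst of refusals is often the first sign that an agent is being steered somewhere it shouldn't go.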

What This Means for Decision Makers

If you're evaluating AI initiatives, here's the honest reality: your security team probably can't tell you whether your current architecture can support AI safely. Not because they're incompetent, but because the tools and frameworks to answer that question are still maturing.

Start by asking your security and engineering leadership a direct question: Do we understand how our AI systems behave at runtime, and can we detect when that behavior deviates from what we expect?
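To make "detect when behavior deviates" concrete, here is a deliberately simple sketch. It assumes that action counts recorded during a pre-production run and a production window of comparable size are directly comparable. It then flags actions the agent never performed before and actions whose frequency jumped well above baseline. The function names, the action names, and the `rate_factor` threshold of 3.0 are illustrative assumptions, not recommended values.

```python
from collections import Counter


def build_baseline(observed_actions: list[str]) -> Counter:
    """Count how often each action occurred during a pre-production run."""
    return Counter(observed_actions)


def find_deviations(baseline: Counter, runtime_actions: list[str],
                    rate_factor: float = 3.0) -> list[str]:
    """Flag actions never seen in the baseline, or seen far more often.

    Assumes the baseline and runtime windows cover a comparable amount of
    activity (e.g. the same number of sessions), so raw counts compare directly.
    """
    runtime = Counter(runtime_actions)
    deviations = []
    for action, count in sorted(runtime.items()):
        expected = baseline.get(action, 0)
        if expected == 0:
            deviations.append(f"new action: {action}")
        elif count > rate_factor * expected:
            deviations.append(f"rate spike: {action} ({count} vs baseline {expected})")
    return deviations


baseline = build_baseline(["search_kb"] * 40 + ["read_order"] * 10)
print(find_deviations(baseline, ["search_kb"] * 35 + ["read_order"] * 45 + ["export_customers"]))
# ['new action: export_customers', 'rate spike: read_order (45 vs baseline 10)']
```

Real systems would look at sequences, targets, and timing rather than raw counts alone. Even so, a check this crude answers the question above in a way that "we're working on it" does not.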

At most organizations, the answer will be some version of "partially" or "we're working on it." That's fine. What matters is whether you're treating this as an architectural priority or hoping your existing security stack will somehow handle it.

The companies that get AI security architecture right won't necessarily be the most cautious adopters. They'll be the ones who can deploy AI more aggressively because they've built the foundations to do it safely. That's the real competitive advantage here: not avoiding AI, but being able to use it faster and more confidently than organizations that are constantly dealing with incidents.

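The partnership between Palo Alto Networks and Google Cloud is a leading indicator of the shift from buying AI security tools to designing AI security into the architecture. The question is whether your organization will be ahead of that shift or scrambling to catch up after something goes wrong.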